Network architectures

OpenStack-Ansible supports a number of different network architectures, and can be deployed using a single network interface for non-production workloads or using multiple network interfaces or bonded interfaces for production workloads.

The OpenStack-Ansible reference architecture segments traffic using VLANs across multiple network interfaces or bonds. The networks commonly used in an OpenStack-Ansible deployment are listed in the following table:

| Network | CIDR | VLAN |
|---|---|---|
| Management Network | 172.29.236.0/22 | 10 |
| Overlay Network | 172.29.240.0/22 | 30 |
| Storage Network | 172.29.244.0/22 | 20 |

The Management Network, also referred to as the container network, provides management of and communication between the infrastructure and OpenStack services running in containers or on metal. The management network uses a dedicated VLAN typically connected to the br-mgmt bridge, and may also be used as the primary interface used to interact with the server via SSH.

The Overlay Network, also referred to as the tunnel network, provides connectivity between hosts for the purpose of tunnelling encapsulated traffic using VXLAN, GENEVE, or other protocols. The overlay network uses a dedicated VLAN typically connected to the br-vxlan bridge.

The Storage Network provides segregated access to Block Storage from OpenStack services such as Cinder and Glance. The storage network uses a dedicated VLAN typically connected to the br-storage bridge.

Note

The CIDRs and VLANs listed for each network are examples and may be different in your environment.
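
As a quick sanity check of the example values, the following minimal sketch uses Python's standard `ipaddress` module to derive the netmask and the number of usable addresses for each example CIDR. The dictionary name `EXAMPLE_NETWORKS` and the helper `describe` are made up for this illustration, and the CIDRs are only the example values from the table, not requirements.

```python
import ipaddress

# Example CIDRs from the table above; adjust to match your environment.
EXAMPLE_NETWORKS = {
    "management": "172.29.236.0/22",
    "overlay": "172.29.240.0/22",
    "storage": "172.29.244.0/22",
}


def describe(cidr):
    """Return (netmask, usable host count) for an IPv4 CIDR string."""
    net = ipaddress.ip_network(cidr)
    # Exclude the network and broadcast addresses from the usable count.
    return str(net.netmask), net.num_addresses - 2


if __name__ == "__main__":
    for name, cidr in EXAMPLE_NETWORKS.items():
        netmask, hosts = describe(cidr)
        print(f"{name}: {cidr} netmask={netmask} usable_hosts={hosts}")
```

The derived netmask for a /22 is the same `255.255.252.0` value that appears in the interface configuration examples later on this page.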

Additional VLANs may be required for the following purposes:

External provider networks for Floating IPs and instances

Self-service project/tenant networks for instances

Other OpenStack services

Network interfaces

Configuring network interfaces

OpenStack-Ansible does not mandate any specific method of configuring network interfaces on the host. You may choose any tool, such as ifupdown, netplan, systemd-networkd, NetworkManager, or another operating-system-specific tool. The only requirement is that a set of functioning network bridges and interfaces is created that matches those expected by OpenStack-Ansible, plus any that you choose to specify for neutron physical interfaces.
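
Whichever tool is used, it can be useful to confirm that the expected bridges exist before deploying. The sketch below is one possible check, not part of OpenStack-Ansible: it relies on the fact that a Linux bridge device exposes a `bridge` subdirectory under its entry in `/sys/class/net`, so it applies to Linux bridges only. The function name `missing_bridges` and its `sysfs_net` parameter are hypothetical.

```python
import os


def missing_bridges(expected, sysfs_net="/sys/class/net"):
    """Return the names in `expected` that are not Linux bridge devices.

    A Linux bridge exposes a `bridge` subdirectory under its entry
    in /sys/class/net; other device types do not.
    """
    return [
        name
        for name in expected
        if not os.path.isdir(os.path.join(sysfs_net, name, "bridge"))
    ]


if __name__ == "__main__":
    # Bridge names used in this guide; br-storage is optional.
    print(missing_bridges(["br-mgmt", "br-vxlan", "br-storage"]))
```

An empty list means every named bridge was found. The `sysfs_net` argument exists only so the check can be pointed at a different directory when testing.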

A selection of example network configuration files is provided in the etc/network and etc/netplan directories for Ubuntu systems. These files are expected to need adjustment for the specific requirements of each deployment.

If you want to delegate management of network bridges and interfaces to OpenStack-Ansible, you can define the variables openstack_hosts_systemd_networkd_devices and openstack_hosts_systemd_networkd_networks in group_vars/lxc_hosts, for example:

    openstack_hosts_systemd_networkd_devices:
      - NetDev:
          Name: vlan-mgmt
          Kind: vlan
        VLAN:
          Id: 10
      - NetDev:
          Name: "{{ management_bridge }}"
          Kind: bridge
        Bridge:
          ForwardDelaySec: 0
          HelloTimeSec: 2
          MaxAgeSec: 12
          STP: off

    openstack_hosts_systemd_networkd_networks:
      - interface: "vlan-mgmt"
        bridge: "{{ management_bridge }}"
      - interface: "{{ management_bridge }}"
        address: "{{ management_address }}"
        netmask: "255.255.252.0"
        gateway: "172.29.236.1"
      - interface: "eth0"
        vlan:
          - "vlan-mgmt"
        # NOTE: `05` is prefixed to filename to have precedence over netplan
        filename: 05-lxc-net-eth0
        address: "{{ ansible_facts['eth0']['ipv4']['address'] }}"
        netmask: "{{ ansible_facts['eth0']['ipv4']['netmask'] }}"
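
The keys in each device entry (`NetDev`, `VLAN`, `Bridge`) appear to mirror section and option names used in systemd `.netdev` files. To make that mapping concrete, the sketch below renders the first device entry as INI-style text. How OpenStack-Ansible actually templates these files is not shown on this page, so `render_unit` is a hypothetical helper for illustration only.

```python
def render_unit(sections):
    """Render {section: {key: value}} as systemd-style INI text."""
    blocks = []
    for section, options in sections.items():
        lines = [f"[{section}]"]
        lines += [f"{key}={value}" for key, value in options.items()]
        blocks.append("\n".join(lines))
    # Sections are separated by one blank line.
    return "\n\n".join(blocks) + "\n"


# First entry of the example device list above.
vlan_mgmt = {
    "NetDev": {"Name": "vlan-mgmt", "Kind": "vlan"},
    "VLAN": {"Id": 10},
}

if __name__ == "__main__":
    print(render_unit(vlan_mgmt), end="")
```

Running the script prints a `[NetDev]` section with `Name=vlan-mgmt` and `Kind=vlan`, followed by a `[VLAN]` section with `Id=10`.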

If you need to run pre or post hooks for interfaces, you will need to configure a systemd service for that. This can be done using the variable openstack_hosts_systemd_services, for example:

    openstack_hosts_systemd_services:
      - service_name: "{{ management_bridge }}-hook"
        state: started
        enabled: yes
        service_type: oneshot
        execstarts:
          - /bin/bash -c "/bin/echo 'management bridge is available'"
        config_overrides:
          Unit:
            Wants: network-online.target
            After: "sys-subsystem-net-devices-{{ management_bridge }}.device"
            BindsTo: "sys-subsystem-net-devices-{{ management_bridge }}.device"

Single interface or bond

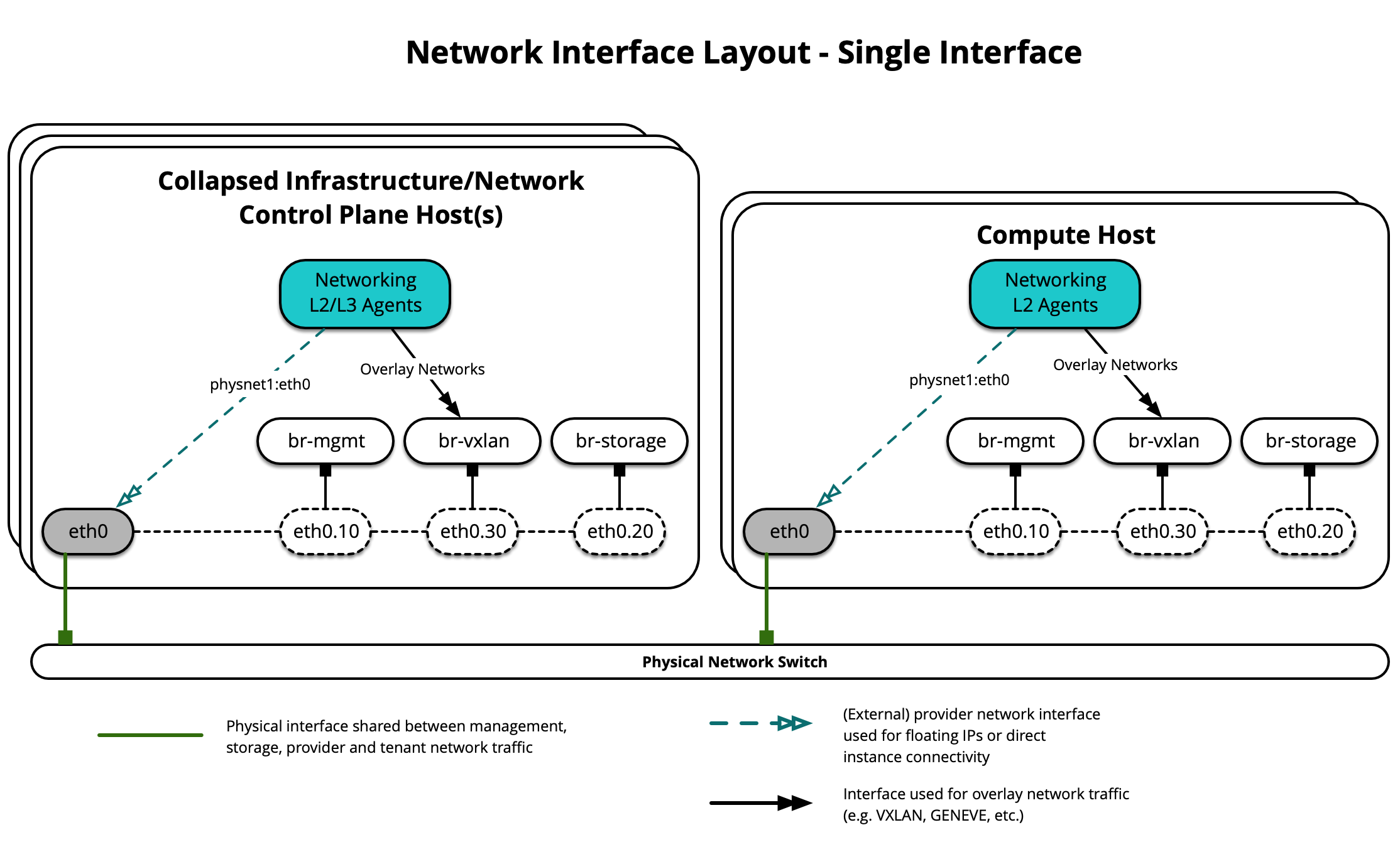

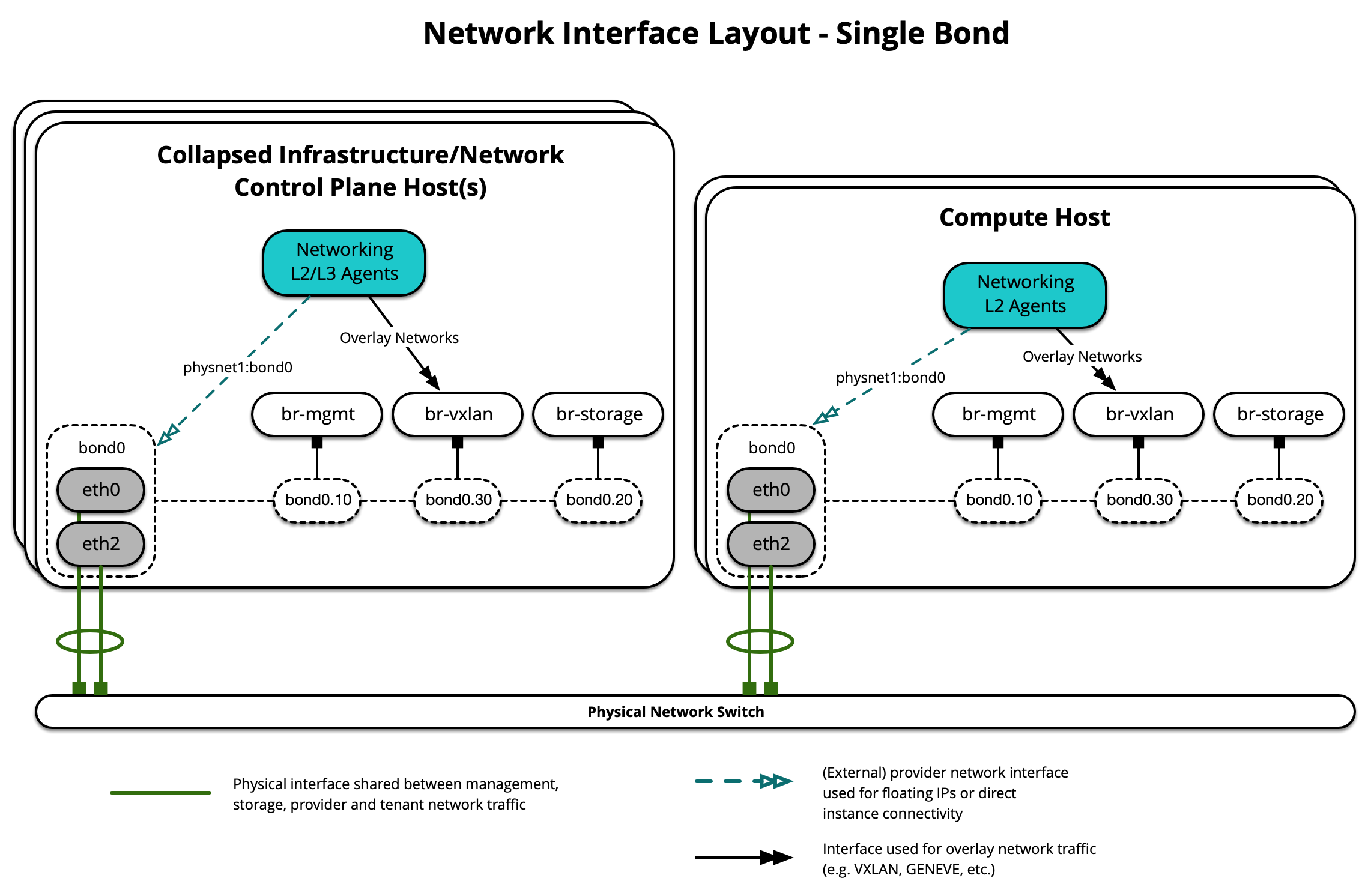

OpenStack-Ansible supports the use of a single interface or set of bonded interfaces that carry traffic for OpenStack services as well as instances.

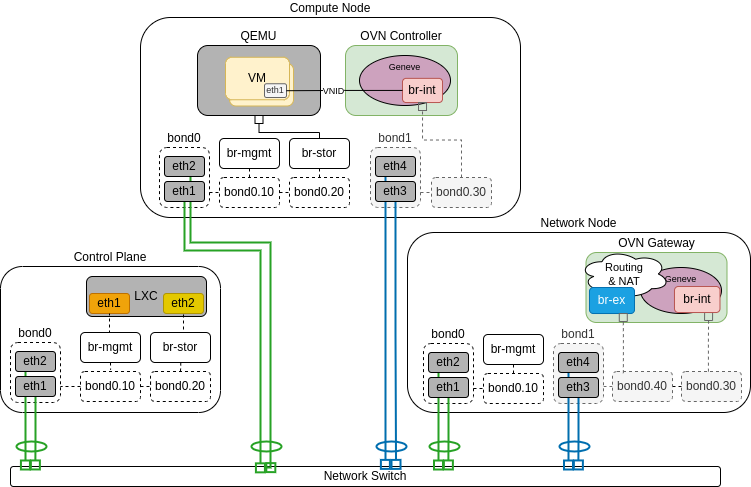

Open Virtual Network (OVN)

The following diagrams demonstrate hosts using a single bond with OVN.

In the scenario below, only the network node is connected to the external network. Compute nodes have no external connectivity, so routers are needed to reach external networks:

The following diagram demonstrates a compute node serving as an OVN gateway. It is connected to the public network, which allows VMs to be connected to public networks directly as well as through routers:

Open vSwitch and Linux Bridge

The following diagram demonstrates hosts using a single interface in the OVS and Linux Bridge scenario:

The following diagram demonstrates hosts using a single bond:

Each host will require the correct network bridges to be implemented. The following is the /etc/network/interfaces file for infra1 using a single bond.

Note

If your environment uses p1p1 or another name instead of eth0, make sure that all references to eth0 in all configuration files are replaced with the appropriate name. The same applies to additional network interfaces.

    # This is a multi-NIC bonded configuration to implement the required bridges
    # for OpenStack-Ansible. This illustrates the configuration of the first
    # Infrastructure host and the IP addresses assigned should be adapted
    # for implementation on the other hosts.
    #
    # After implementing this configuration, the host will need to be
    # rebooted.

    # Assuming that eth0/1 and eth2/3 are dual port NIC's we pair
    # eth0 with eth2 for increased resiliency in the case of one interface card
    # failing.
    auto eth0
    iface eth0 inet manual
        bond-master bond0
        bond-primary eth0

    auto eth1
    iface eth1 inet manual

    auto eth2
    iface eth2 inet manual
        bond-master bond0

    auto eth3
    iface eth3 inet manual

    # Create a bonded interface. Note that the "bond-slaves" is set to none. This
    # is because the bond-master has already been set in the raw interfaces for
    # the new bond0.
    auto bond0
    iface bond0 inet manual
        bond-slaves none
        bond-mode active-backup
        bond-miimon 100
        bond-downdelay 200
        bond-updelay 200

    # Container/Host management VLAN interface
    auto bond0.10
    iface bond0.10 inet manual
        vlan-raw-device bond0

    # OpenStack Networking VXLAN (tunnel/overlay) VLAN interface
    auto bond0.30
    iface bond0.30 inet manual
        vlan-raw-device bond0

    # Storage network VLAN interface (optional)
    auto bond0.20
    iface bond0.20 inet manual
        vlan-raw-device bond0

    # Container/Host management bridge
    auto br-mgmt
    iface br-mgmt inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports bond0.10
        address 172.29.236.11
        netmask 255.255.252.0
        gateway 172.29.236.1
        dns-nameservers 8.8.8.8 8.8.4.4

    # OpenStack Networking VXLAN (tunnel/overlay) bridge
    #
    # Nodes hosting Neutron agents must have an IP address on this interface,
    # including COMPUTE, NETWORK, and collapsed INFRA/NETWORK nodes.
    #
    auto br-vxlan
    iface br-vxlan inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports bond0.30
        address 172.29.240.16
        netmask 255.255.252.0

    # OpenStack Networking VLAN bridge
    #
    # The "br-vlan" bridge is no longer necessary for deployments unless Neutron
    # agents are deployed in a container. Instead, a direct interface such as
    # bond0 can be specified via the "host_bind_override" override when defining
    # provider networks.
    #
    #auto br-vlan
    #iface br-vlan inet manual
    #    bridge_stp off
    #    bridge_waitport 0
    #    bridge_fd 0
    #    bridge_ports bond0

    # compute1 Network VLAN bridge
    #auto br-vlan
    #iface br-vlan inet manual
    #    bridge_stp off
    #    bridge_waitport 0
    #    bridge_fd 0
    #

    # Storage bridge (optional)
    #
    # Only the COMPUTE and STORAGE nodes must have an IP address
    # on this bridge. When used by infrastructure nodes, the
    # IP addresses are assigned to containers which use this
    # bridge.
    #
    auto br-storage
    iface br-storage inet manual
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports bond0.20

    # compute1 Storage bridge
    #auto br-storage
    #iface br-storage inet static
    #    bridge_stp off
    #    bridge_waitport 0
    #    bridge_fd 0
    #    bridge_ports bond0.20
    #    address 172.29.244.16
    #    netmask 255.255.252.0
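
The VLAN sub-interface and bridge stanzas in the file above follow a repeating pattern: a `<bond>.<vlan>` interface with `vlan-raw-device`, then a bridge whose `bridge_ports` points at it. The sketch below generates that pair for an unaddressed bridge. The helper name `vlan_bridge_stanzas` is invented for this illustration, and the output is meant as a starting point to review by hand, not as a replacement for the example files.

```python
def vlan_bridge_stanzas(bridge, parent, vlan_id):
    """Return ifupdown stanzas for a VLAN sub-interface and its bridge.

    The bridge is left unaddressed (inet manual); add address lines by
    hand where a host needs an IP address on that bridge.
    """
    vlan_if = f"{parent}.{vlan_id}"
    return "\n".join([
        f"auto {vlan_if}",
        f"iface {vlan_if} inet manual",
        f"    vlan-raw-device {parent}",
        "",
        f"auto {bridge}",
        f"iface {bridge} inet manual",
        "    bridge_stp off",
        "    bridge_waitport 0",
        "    bridge_fd 0",
        f"    bridge_ports {vlan_if}",
    ]) + "\n"


if __name__ == "__main__":
    # Reproduces the storage VLAN interface and bridge from the example.
    print(vlan_bridge_stanzas("br-storage", "bond0", 20), end="")
```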

Multiple interfaces or bonds

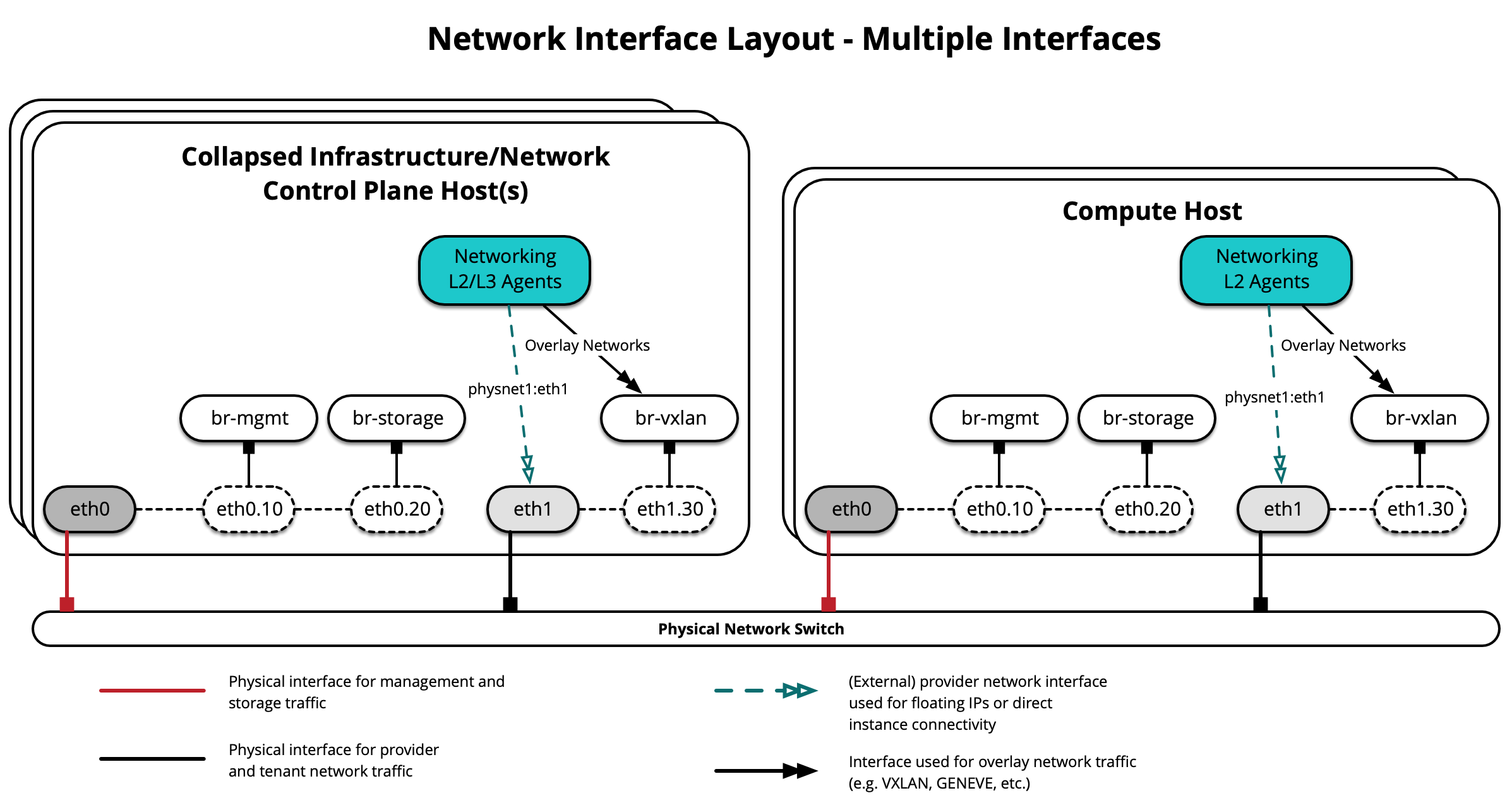

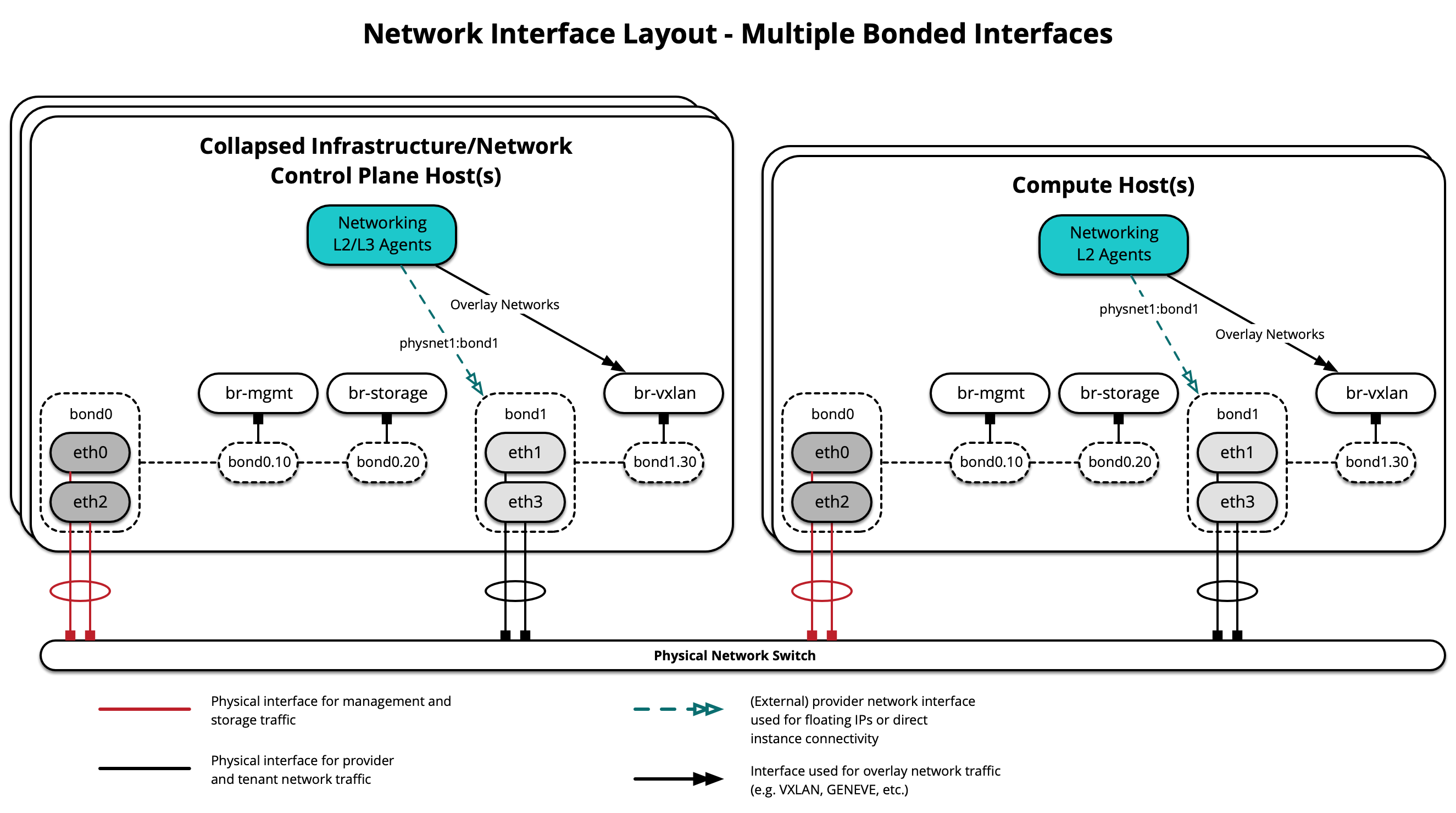

OpenStack-Ansible supports the use of multiple interfaces or sets of bonded interfaces that carry traffic for OpenStack services and instances.

Open Virtual Network (OVN)

The following diagrams demonstrate hosts using multiple bonds with OVN.

In the scenario below, only the network node is connected to the external network. Compute nodes have no external connectivity, so routers are needed to reach external networks:

The following diagram demonstrates a compute node serving as an OVN gateway. It is connected to the public network, which allows VMs to be connected to public networks directly as well as through routers:

Open vSwitch and Linux Bridge

The following diagram demonstrates hosts using multiple interfaces in the OVS and Linux Bridge scenario:

The following diagram demonstrates hosts using multiple bonds:

Each host will require the correct network bridges to be implemented. The following is the /etc/network/interfaces file for infra1 using multiple bonded interfaces.

Note

If your environment uses p1p1 or another name instead of eth0, make sure that all references to eth0 in all configuration files are replaced with the appropriate name. The same applies to additional network interfaces.

    # This is a multi-NIC bonded configuration to implement the required bridges
    # for OpenStack-Ansible. This illustrates the configuration of the first
    # Infrastructure host and the IP addresses assigned should be adapted
    # for implementation on the other hosts.
    #
    # After implementing this configuration, the host will need to be
    # rebooted.

    # Assuming that eth0/1 and eth2/3 are dual port NIC's we pair
    # eth0 with eth2 and eth1 with eth3 for increased resiliency
    # in the case of one interface card failing.
    auto eth0
    iface eth0 inet manual
        bond-master bond0
        bond-primary eth0

    auto eth1
    iface eth1 inet manual
        bond-master bond1
        bond-primary eth1

    auto eth2
    iface eth2 inet manual
        bond-master bond0

    auto eth3
    iface eth3 inet manual
        bond-master bond1

    # Create a bonded interface. Note that the "bond-slaves" is set to none. This
    # is because the bond-master has already been set in the raw interfaces for
    # the new bond0.
    auto bond0
    iface bond0 inet manual
        bond-slaves none
        bond-mode active-backup
        bond-miimon 100
        bond-downdelay 200
        bond-updelay 200

    # This bond will carry VLAN and VXLAN traffic to ensure isolation from
    # control plane traffic on bond0.
    auto bond1
    iface bond1 inet manual
        bond-slaves none
        bond-mode active-backup
        bond-miimon 100
        bond-downdelay 250
        bond-updelay 250

    # Container/Host management VLAN interface
    auto bond0.10
    iface bond0.10 inet manual
        vlan-raw-device bond0

    # OpenStack Networking VXLAN (tunnel/overlay) VLAN interface
    auto bond1.30
    iface bond1.30 inet manual
        vlan-raw-device bond1

    # Storage network VLAN interface (optional)
    auto bond0.20
    iface bond0.20 inet manual
        vlan-raw-device bond0

    # Container/Host management bridge
    auto br-mgmt
    iface br-mgmt inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports bond0.10
        address 172.29.236.11
        netmask 255.255.252.0
        gateway 172.29.236.1
        dns-nameservers 8.8.8.8 8.8.4.4

    # OpenStack Networking VXLAN (tunnel/overlay) bridge
    #
    # Nodes hosting Neutron agents must have an IP address on this interface,
    # including COMPUTE, NETWORK, and collapsed INFRA/NETWORK nodes.
    #
    auto br-vxlan
    iface br-vxlan inet static
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports bond1.30
        address 172.29.240.16
        netmask 255.255.252.0

    # OpenStack Networking VLAN bridge
    #
    # The "br-vlan" bridge is no longer necessary for deployments unless Neutron
    # agents are deployed in a container. Instead, a direct interface such as
    # bond1 can be specified via the "host_bind_override" override when defining
    # provider networks.
    #
    #auto br-vlan
    #iface br-vlan inet manual
    #    bridge_stp off
    #    bridge_waitport 0
    #    bridge_fd 0
    #    bridge_ports bond1

    # compute1 Network VLAN bridge
    #auto br-vlan
    #iface br-vlan inet manual
    #    bridge_stp off
    #    bridge_waitport 0
    #    bridge_fd 0
    #

    # Storage bridge (optional)
    #
    # Only the COMPUTE and STORAGE nodes must have an IP address
    # on this bridge. When used by infrastructure nodes, the
    # IP addresses are assigned to containers which use this
    # bridge.
    #
    auto br-storage
    iface br-storage inet manual
        bridge_stp off
        bridge_waitport 0
        bridge_fd 0
        bridge_ports bond0.20

    # compute1 Storage bridge
    #auto br-storage
    #iface br-storage inet static
    #    bridge_stp off
    #    bridge_waitport 0
    #    bridge_fd 0
    #    bridge_ports bond0.20
    #    address 172.29.244.16
    #    netmask 255.255.252.0
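
With more than one bond it is easy to attach a bridge to the wrong one. As a rough aid, the sketch below reads an interfaces file and reports which ports each bridge uses, so you can confirm, for example, that br-vxlan sits on bond1 while br-mgmt stays on bond0. The parser is deliberately minimal and is an assumption of this illustration: it only understands `iface` and `bridge_ports` lines and skips anything commented out. `SAMPLE` is a shortened excerpt in the style of the file above.

```python
def bridge_ports(interfaces_text):
    """Map each iface name to its bridge_ports list.

    Lines starting with '#' are ignored, so commented-out stanzas
    are not reported.
    """
    result = {}
    current = None
    for raw in interfaces_text.splitlines():
        words = raw.split()
        if not words or words[0].startswith("#"):
            continue
        if words[0] == "iface" and len(words) > 1:
            # Remember which stanza the following option lines belong to.
            current = words[1]
        elif words[0] == "bridge_ports" and current is not None:
            result[current] = words[1:]
    return result


SAMPLE = """\
auto br-mgmt
iface br-mgmt inet static
    bridge_ports bond0.10
auto br-vxlan
iface br-vxlan inet static
    bridge_ports bond1.30
#iface br-vlan inet manual
#    bridge_ports bond1
"""

if __name__ == "__main__":
    for bridge, ports in bridge_ports(SAMPLE).items():
        print(bridge, "->", " ".join(ports))
```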

Additional resources

For more information on how to properly configure network interface files and OpenStack-Ansible configuration files for different deployment scenarios, please refer to the following:

For network agent and container networking topologies, please refer to the following: