Verification reports

Rally stores all verification results in its database, so you can access and process them at any time. Whatever verifier you use, results are stored in a unified format, and reports are unified too.

We support several types of reports out of the box: HTML, HTML-Static, JSON, and JUnit-XML. The reporting system is pluggable, so you can write your own plugin to build a specific report or to export results to a specific system (see "HowTo add new reporting mechanism" for more details).

HTML reports

An HTML report is the most convenient type of report. It includes as much useful information about verifications as possible.

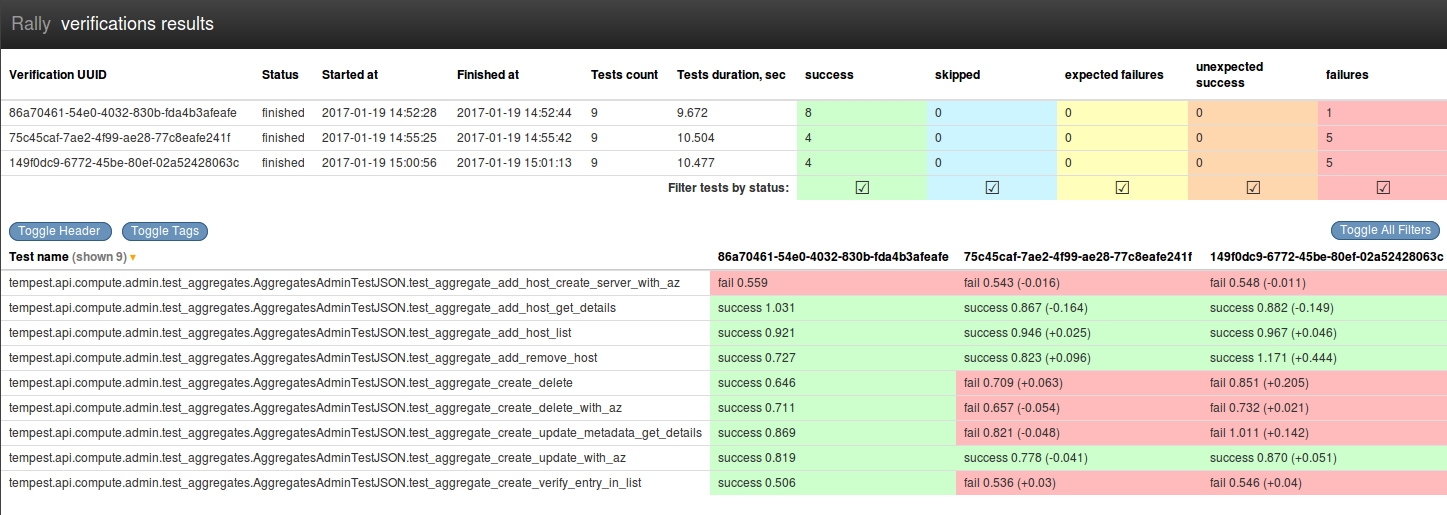

Here is an example of an HTML report for 3 verifications. It was generated by the following command:

    $ rally verify report --uuid <uuid-1> <uuid-2> <uuid-3> --type html \
        --to ./report.html
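If the same verifications are needed in several formats, the command above can be scripted. The sketch below only assembles argument lists from the options shown in that command (`--uuid`, `--type`, `--to`). The helper name `build_report_command`, the output file names, and the lowercase `html-static` spelling are illustrative assumptions rather than something this page confirms.

```python
import shlex

# Report types named on this page mapped to a file extension.
# The "html-static" spelling and the extensions are assumptions.
REPORT_EXTENSIONS = {
    "html": "html",
    "html-static": "html",
    "json": "json",
    "junit-xml": "xml",
}


def build_report_command(uuids, report_type, destination):
    """Return the argument list for one `rally verify report` call."""
    if report_type not in REPORT_EXTENSIONS:
        raise ValueError("unknown report type: %s" % report_type)
    return (["rally", "verify", "report", "--uuid"] + list(uuids)
            + ["--type", report_type, "--to", destination])


if __name__ == "__main__":
    uuids = ["<uuid-1>", "<uuid-2>", "<uuid-3>"]
    for report_type, ext in REPORT_EXTENSIONS.items():
        destination = "./report-%s.%s" % (report_type, ext)
        cmd = build_report_command(uuids, report_type, destination)
        # Print only; hand `cmd` to subprocess.run() to actually execute it.
        print(shlex.join(cmd))
```

Building a list instead of a single string keeps each UUID a separate argument, so no shell quoting is needed when the list is passed to `subprocess.run()`.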

The report consists of two tables.

The first is a summary table. It includes basic information about the verifications: UUIDs, numbers of tests, when they were launched, statuses, and so on. The right part of the table shows detailed information grouped by test status.

If the summary table is too tall and leaves too little room for test results, you can push the "Toggle Header" button.

The second table contains the actual verification results, grouped by test name. The result of a test for a particular verification is highlighted with one of the following colours:

- Red - The test has "failed" status.

- Orange - It is an "unexpected success". Most parsers count it as a failure.

- Green - Everything is OK. The test succeeded.

- Yellow - It is an "expected failure".

- Light Blue - The test was skipped. This is neither good nor bad.

Comparison of several verifications is a default, built-in behaviour of reports. The difference between verifications is displayed in brackets after the actual test duration. A + sign means that the current result is greater than the baseline by the number that follows the sign; a - sign means the opposite. Note that all diffs are comparisons with the first verification in the row.
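The comparison rule can be sketched in a few lines of Python. This is not Rally's rendering code: the function names and the exact cell format are assumptions, and only the rule itself (every diff is taken against the first verification in the row) comes from the description above.

```python
def duration_diffs(durations):
    """Pair each duration with its diff against the first one in the row.

    The first entry is the baseline, so its diff is None.
    """
    baseline = durations[0]
    pairs = [(baseline, None)]
    for value in durations[1:]:
        pairs.append((value, round(value - baseline, 3)))
    return pairs


def format_cell(duration, diff):
    """Render a duration with its signed diff in brackets (format assumed)."""
    if diff is None:
        return "%s" % duration
    return "%s (%+.3f)" % (duration, diff)


if __name__ == "__main__":
    row = [1.111, 22.222, 0.5]  # durations of one test in three verifications
    print([format_cell(d, diff) for d, diff in duration_diffs(row)])
```

Note that the third value is compared with the first one, not with the second.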

Filtering results

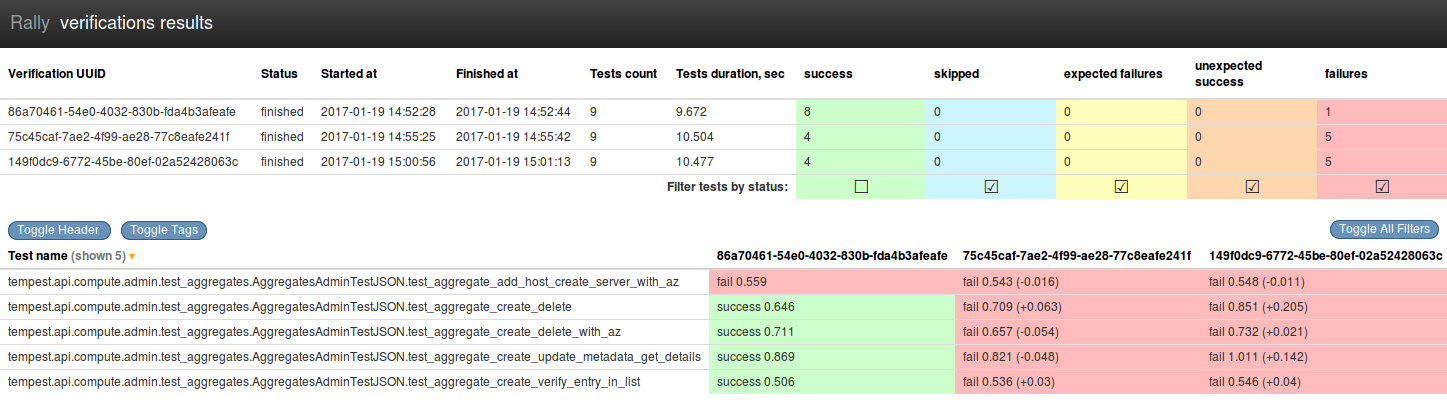

You can filter tests by ticking or clearing the check box in the corresponding status column of the summary table.
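The same kind of filtering can be applied offline to exported data. The sketch below assumes the per-test structure shown in the JSON report example on this page. It also assumes that a test stays visible if at least one of its results has a selected status; that is one plausible reading of the check boxes, not a confirmed description of the HTML page's behaviour.

```python
# Trimmed-down "tests" mapping in the shape of the JSON report example.
TESTS = {
    "some.test.TestCase.test_foo[tag1,tag2]": {
        "name": "some.test.TestCase.test_foo",
        "tags": ["tag1", "tag2"],
        "by_verification": {
            "verification-uuid-1": {"status": "success", "duration": "1.111"},
            "verification-uuid-2": {"status": "success", "duration": "22.222"},
        },
    },
    "some.test.TestCase.test_failed": {
        "name": "some.test.TestCase.test_failed",
        "tags": [],
        "by_verification": {
            "verification-uuid-2": {"status": "fail", "duration": "4"},
        },
    },
}


def filter_tests(tests, shown_statuses):
    """Keep tests that have at least one result with a shown status."""
    shown = set(shown_statuses)
    return {
        test_id: test
        for test_id, test in tests.items()
        if any(result["status"] in shown
               for result in test["by_verification"].values())
    }


if __name__ == "__main__":
    # Comparable to leaving only the "fail" check box ticked.
    print(sorted(filter_tests(TESTS, ["fail"])))
```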

Tests Tags

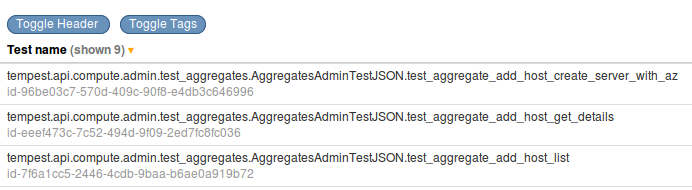

Some test tools support test tagging, which can be used for setting unique IDs, groups, and so on. Usually such tags are included in the test name. This is inconvenient, so Rally stores tags separately. By default they are hidden, but if you push the "Toggle tags" button, they are displayed under the test names.

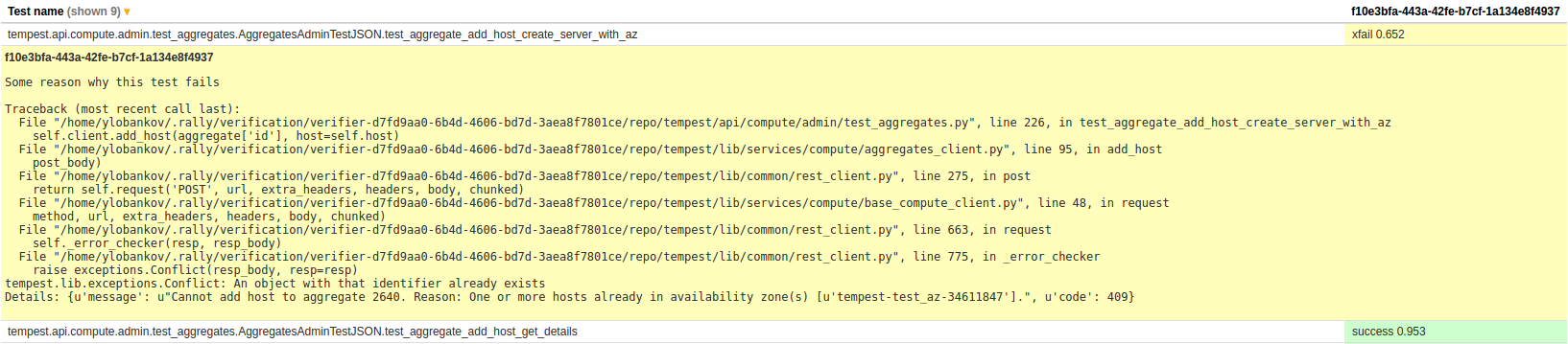
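To illustrate what storing tags separately means, the sketch below splits a tagged test identifier of the form `name[tag1,tag2]` (the form used in the JSON report example on this page) into a name and a list of tags. The function `split_tags` and the regular expression are illustrative and are not Rally's own parser.

```python
import re

# A name, optionally followed by a bracketed, comma-separated tag list.
_TAGGED_NAME = re.compile(r"^(?P<name>[^\[]+)(?:\[(?P<tags>[^\]]*)\])?$")


def split_tags(test_id):
    """Return (name, tags) for an identifier like 'pkg.Class.test[a,b]'."""
    match = _TAGGED_NAME.match(test_id)
    if match is None:
        # Unexpected shape: treat the whole identifier as the name.
        return test_id, []
    tags = match.group("tags")
    return match.group("name"), (tags.split(",") if tags else [])


if __name__ == "__main__":
    print(split_tags("some.test.TestCase.test_foo[tag1,tag2]"))
    print(split_tags("some.test.TestCase.test_xfail"))
```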

Tracebacks & Reasons

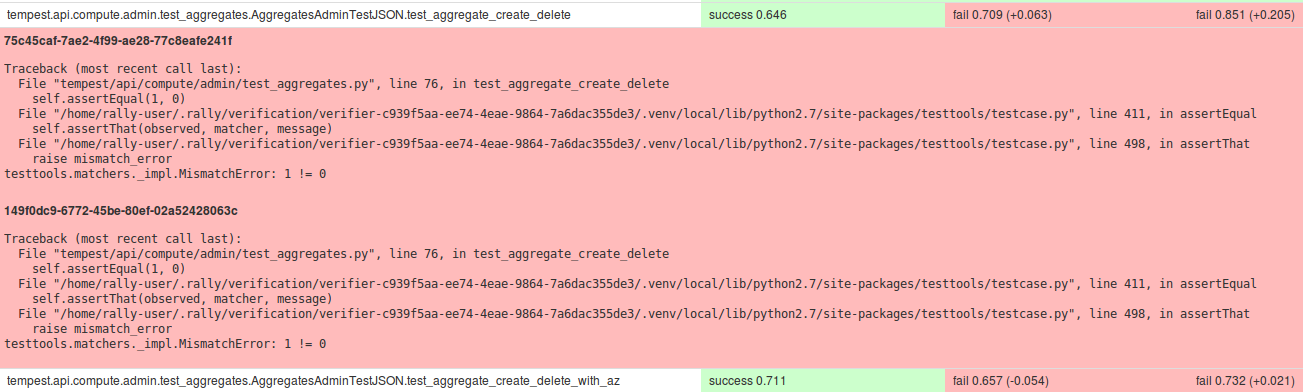

Tests with "failed" and "expected failure" statuses have tracebacks of the failures. Tests with "skipped", "expected failure", or "unexpected success" status have a "reason" for the event. By default, both tracebacks and reasons are hidden, but you can show them by clicking on the appropriate test.
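In the JSON report example on this page, the reason and the traceback of a test share a single `details` string. A minimal sketch of separating the two follows. It assumes the traceback always starts with the standard Python marker line; the helper `split_details` is hypothetical and not part of Rally.

```python
TRACEBACK_MARKER = "Traceback (most recent call last)"


def split_details(details):
    """Split a details string into (reason, traceback); either may be None."""
    index = details.find(TRACEBACK_MARKER)
    if index == -1:
        # No traceback: the whole string is the reason (e.g. a skipped test).
        return details.strip() or None, None
    reason = details[:index].strip() or None
    return reason, details[index:].strip()


if __name__ == "__main__":
    xfail_details = (
        "Some reason why it is expected.\n\n"
        "Traceback (most recent call last): \n"
        "  File \"fake.py\", line 2, in foo\n"
        "    raise Exception()\n"
        "Exception"
    )
    reason, traceback_text = split_details(xfail_details)
    print(reason)
    print(traceback_text)
```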

Plugins Reference for all out-of-the-box reporters

json

Generates a verification report in JSON format.

An example of the report (All dates, numbers, names appearing in this example are fictitious. Any resemblance to real things is purely coincidental):

    {
        "verifications": {
            "verification-uuid-1": {
                "status": "finished",
                "skipped": 1,
                "started_at": "2001-01-01T00:00:00",
                "finished_at": "2001-01-01T00:05:00",
                "tests_duration": 5,
                "run_args": {
                    "pattern": "set=smoke",
                    "xfail_list": {
                        "some.test.TestCase.test_xfail":
                            "Some reason why it is expected."},
                    "skip_list": {
                        "some.test.TestCase.test_skipped":
                            "This test was skipped intentionally"},
                },
                "success": 1,
                "expected_failures": 1,
                "tests_count": 3,
                "failures": 0,
                "unexpected_success": 0
            },
            "verification-uuid-2": {
                "status": "finished",
                "skipped": 1,
                "started_at": "2002-01-01T00:00:00",
                "finished_at": "2002-01-01T00:05:00",
                "tests_duration": 5,
                "run_args": {
                    "pattern": "set=smoke",
                    "xfail_list": {
                        "some.test.TestCase.test_xfail":
                            "Some reason why it is expected."},
                    "skip_list": {
                        "some.test.TestCase.test_skipped":
                            "This test was skipped intentionally"},
                },
                "success": 1,
                "expected_failures": 1,
                "tests_count": 3,
                "failures": 1,
                "unexpected_success": 0
            }
        },
        "tests": {
            "some.test.TestCase.test_foo[tag1,tag2]": {
                "name": "some.test.TestCase.test_foo",
                "tags": ["tag1","tag2"],
                "by_verification": {
                    "verification-uuid-1": {
                        "status": "success",
                        "duration": "1.111"
                    },
                    "verification-uuid-2": {
                        "status": "success",
                        "duration": "22.222"
                    }
                }
            },
            "some.test.TestCase.test_skipped[tag1]": {
                "name": "some.test.TestCase.test_skipped",
                "tags": ["tag1"],
                "by_verification": {
                    "verification-uuid-1": {
                        "status": "skipped",
                        "duration": "0",
                        "details": "Skipped until Bug: 666 is resolved."
                    },
                    "verification-uuid-2": {
                        "status": "skipped",
                        "duration": "0",
                        "details": "Skipped until Bug: 666 is resolved."
                    }
                }
            },
            "some.test.TestCase.test_xfail": {
                "name": "some.test.TestCase.test_xfail",
                "tags": [],
                "by_verification": {
                    "verification-uuid-1": {
                        "status": "xfail",
                        "duration": "3",
                        "details": "Some reason why it is expected.\n\n"
                                   "Traceback (most recent call last): \n"
                                   "  File \"fake.py\", line 13, in <module>\n"
                                   "    yyy()\n"
                                   "  File \"fake.py\", line 11, in yyy\n"
                                   "    xxx()\n"
                                   "  File \"fake.py\", line 8, in xxx\n"
                                   "    bar()\n"
                                   "  File \"fake.py\", line 5, in bar\n"
                                   "    foo()\n"
                                   "  File \"fake.py\", line 2, in foo\n"
                                   "    raise Exception()\n"
                                   "Exception"
                    },
                    "verification-uuid-2": {
                        "status": "xfail",
                        "duration": "3",
                        "details": "Some reason why it is expected.\n\n"
                                   "Traceback (most recent call last): \n"
                                   "  File \"fake.py\", line 13, in <module>\n"
                                   "    yyy()\n"
                                   "  File \"fake.py\", line 11, in yyy\n"
                                   "    xxx()\n"
                                   "  File \"fake.py\", line 8, in xxx\n"
                                   "    bar()\n"
                                   "  File \"fake.py\", line 5, in bar\n"
                                   "    foo()\n"
                                   "  File \"fake.py\", line 2, in foo\n"
                                   "    raise Exception()\n"
                                   "Exception"
                    }
                }
            },
            "some.test.TestCase.test_failed": {
                "name": "some.test.TestCase.test_failed",
                "tags": [],
                "by_verification": {
                    "verification-uuid-2": {
                        "status": "fail",
                        "duration": "4",
                        "details": "Some reason why it is expected.\n\n"
                                   "Traceback (most recent call last): \n"
                                   "  File \"fake.py\", line 13, in <module>\n"
                                   "    yyy()\n"
                                   "  File \"fake.py\", line 11, in yyy\n"
                                   "    xxx()\n"
                                   "  File \"fake.py\", line 8, in xxx\n"
                                   "    bar()\n"
                                   "  File \"fake.py\", line 5, in bar\n"
                                   "    foo()\n"
                                   "  File \"fake.py\", line 2, in foo\n"
                                   "    raise Exception()\n"
                                   "Exception"
                    }
                }
            }
        }
    }

Namespace: default
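Because the JSON report is plain data, it is easy to post-process. The sketch below counts test statuses per verification. It relies only on the `tests` / `by_verification` / `status` keys visible in the example above; the embedded `REPORT_JSON` is a shortened, hypothetical report and `status_counts` is an illustrative helper.

```python
import json
from collections import Counter

# Shortened, fictitious report in the same shape as the example above.
REPORT_JSON = """
{
  "verifications": {
    "verification-uuid-1": {"status": "finished"},
    "verification-uuid-2": {"status": "finished"}
  },
  "tests": {
    "some.test.TestCase.test_foo[tag1,tag2]": {
      "name": "some.test.TestCase.test_foo",
      "tags": ["tag1", "tag2"],
      "by_verification": {
        "verification-uuid-1": {"status": "success", "duration": "1.111"},
        "verification-uuid-2": {"status": "success", "duration": "22.222"}
      }
    },
    "some.test.TestCase.test_skipped[tag1]": {
      "name": "some.test.TestCase.test_skipped",
      "tags": ["tag1"],
      "by_verification": {
        "verification-uuid-1": {"status": "skipped", "duration": "0"},
        "verification-uuid-2": {"status": "skipped", "duration": "0"}
      }
    },
    "some.test.TestCase.test_failed": {
      "name": "some.test.TestCase.test_failed",
      "tags": [],
      "by_verification": {
        "verification-uuid-2": {"status": "fail", "duration": "4"}
      }
    }
  }
}
"""


def status_counts(report):
    """Return {verification uuid: Counter of test statuses}."""
    counts = {}
    for test in report["tests"].values():
        for uuid, result in test["by_verification"].items():
            counts.setdefault(uuid, Counter())[result["status"]] += 1
    return counts


if __name__ == "__main__":
    report = json.loads(REPORT_JSON)
    for uuid, counter in sorted(status_counts(report).items()):
        print(uuid, dict(counter))
```

For a real report, replace `json.loads(REPORT_JSON)` with `json.load()` on the file written by `--to`.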

junit-xml

Generates a verification report in JUnit-XML format.

An example of the report (All dates, numbers, names appearing in this example are fictitious. Any resemblance to real things is purely coincidental):

    <testsuites>
      <!--Report is generated by Rally 0.8.0 at 2002-01-01T00:00:00-->
      <testsuite id="verification-uuid-1"
                 tests="9"
                 time="1.111"
                 errors="0"
                 failures="3"
                 skipped="0"
                 timestamp="2001-01-01T00:00:00">
        <testcase classname="some.test.TestCase"
                  name="test_foo"
                  time="8"
                  timestamp="2001-01-01T00:01:00" />
        <testcase classname="some.test.TestCase"
                  name="test_skipped"
                  time="0"
                  timestamp="2001-01-01T00:02:00">
          <skipped>Skipped until Bug: 666 is resolved.</skipped>
        </testcase>
        <testcase classname="some.test.TestCase"
                  name="test_xfail"
                  time="3"
                  timestamp="2001-01-01T00:03:00">
          <!--It is an expected failure due to: something-->
          <!--Traceback: HEEELP-->
        </testcase>
        <testcase classname="some.test.TestCase"
                  name="test_uxsuccess"
                  time="3"
                  timestamp="2001-01-01T00:04:00">
          <failure>
            It is an unexpected success. The test should fail due to: It should fail, I said!
          </failure>
        </testcase>
      </testsuite>
      <testsuite id="verification-uuid-2"
                 tests="99"
                 time="22.222"
                 errors="0"
                 failures="33"
                 skipped="0"
                 timestamp="2002-01-01T00:00:00">
        <testcase classname="some.test.TestCase"
                  name="test_foo"
                  time="8"
                  timestamp="2001-02-01T00:01:00" />
        <testcase classname="some.test.TestCase"
                  name="test_failed"
                  time="8"
                  timestamp="2001-02-01T00:02:00">
          <failure>HEEEEEEELP</failure>
        </testcase>
        <testcase classname="some.test.TestCase"
                  name="test_skipped"
                  time="0"
                  timestamp="2001-02-01T00:03:00">
          <skipped>Skipped until Bug: 666 is resolved.</skipped>
        </testcase>
        <testcase classname="some.test.TestCase"
                  name="test_xfail"
                  time="4"
                  timestamp="2001-02-01T00:04:00">
          <!--It is an expected failure due to: something-->
          <!--Traceback: HEEELP-->
        </testcase>
      </testsuite>
    </testsuites>

Namespace: default
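A JUnit-XML report can be read with any XML parser. The sketch below uses Python's standard `xml.etree.ElementTree` to classify each test case per test suite, based only on the `<failure>` and `<skipped>` child elements visible in the example above. The embedded `REPORT_XML` is a shortened, fictitious report and `outcomes_by_suite` is an illustrative helper.

```python
import xml.etree.ElementTree as ET

# Shortened, fictitious report in the same shape as the example above.
REPORT_XML = """<testsuites>
  <testsuite id="verification-uuid-2" tests="4" time="20" errors="0"
             failures="1" skipped="1" timestamp="2002-01-01T00:00:00">
    <testcase classname="some.test.TestCase" name="test_foo" time="8"
              timestamp="2001-02-01T00:01:00" />
    <testcase classname="some.test.TestCase" name="test_failed" time="8"
              timestamp="2001-02-01T00:02:00">
      <failure>HEEEEEEELP</failure>
    </testcase>
    <testcase classname="some.test.TestCase" name="test_skipped" time="0"
              timestamp="2001-02-01T00:03:00">
      <skipped>Skipped until Bug: 666 is resolved.</skipped>
    </testcase>
    <testcase classname="some.test.TestCase" name="test_xfail" time="4"
              timestamp="2001-02-01T00:04:00">
      <!--It is an expected failure due to: something-->
    </testcase>
  </testsuite>
</testsuites>"""


def outcomes_by_suite(xml_text):
    """Map each testsuite id to {"classname.name": outcome}."""
    root = ET.fromstring(xml_text)
    result = {}
    for suite in root.iter("testsuite"):
        cases = {}
        for case in suite.iter("testcase"):
            if case.find("failure") is not None:
                outcome = "failure"
            elif case.find("skipped") is not None:
                outcome = "skipped"
            else:
                # No child element: treated as passed in this sketch.
                outcome = "passed"
            key = "%s.%s" % (case.get("classname"), case.get("name"))
            cases[key] = outcome
        result[suite.get("id")] = cases
    return result


if __name__ == "__main__":
    for suite_id, cases in outcomes_by_suite(REPORT_XML).items():
        print(suite_id, cases)
```

In the example report an expected failure is described only by XML comments, which `ElementTree` discards by default, so this sketch reports such a test as passed.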