Pluggable Publish-Subscribe Infrastructure

This document describes the pluggable API for publish-subscribe and for publish-subscribe drivers. For the design, see the publish_subscribe_abstraction spec [spec].

Instead of relying on the DB driver to support reliable publish-subscribe, we allow pub/sub mechanisms to be integrated into Dragonflow in a pluggable way.

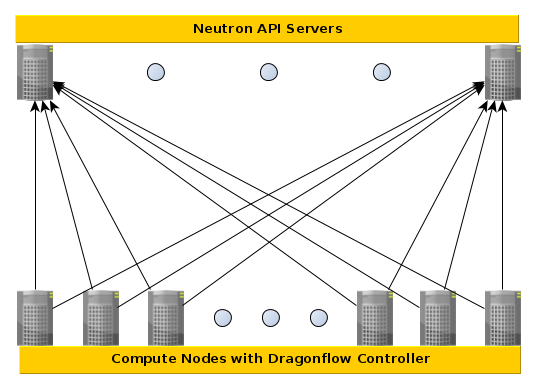

There are several Neutron API servers, and many compute nodes. Every compute node registers as a subscriber to every Neutron API server, which acts as a publisher.

This can be seen in the following diagram:

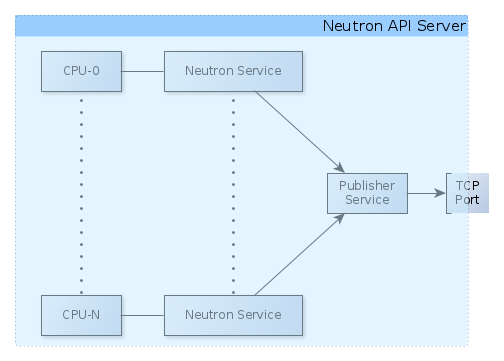

Additionally, the Neutron server service is forked per the number of cores on the server.

Some publishers need to bind to a TCP socket, and some monitoring services must run only once per server rather than once per core. For these reasons, we provide a publisher service.

Communication between the Neutron service and the publisher service therefore requires an inter-process communication (IPC) solution.

This can also be solved using a publish-subscribe mechanism.

Therefore, there are two publish-subscribe implementations - a network-based implementation between Neutron server and Compute node, and an IPC-based implementation between Neutron services and the publisher service.

API

For simplicity, the API for both implementations is the same. It can be found in dragonflow/db/pub_sub_api.py [pub_sub_api.py]. It is recommended to read the code to fully understand the API.

For both network and IPC based communication, a driver has to implement dragonflow.db.pub_sub_api.PubSubApi [pub_sub_api.py]. In both cases, get_publisher and get_subscriber return a dragonflow.db.pub_sub_api.PublisherApi and a dragonflow.db.pub_sub_api.SubscriberApi, respectively.

The class dragonflow.db.pub_sub_api.SubscriberAgentBase provides a starting point for implementing subscribers. Since the publisher API only requires an initialisation method and an event-sending method, both of which are very implementation specific, no such base class is provided for publishers.
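
To make the shape of a driver concrete, the following is a minimal in-memory sketch, not the Dragonflow code. Only the names get_publisher, get_subscriber, initialize, and send_event come from the text above; the class names, the register_topic method, and all method signatures are assumptions made for illustration. The real signatures are in pub_sub_api.py.

```python
import abc
from collections import defaultdict


class PublisherSketch(abc.ABC):
    """Hypothetical publisher interface: initialise, then send events."""

    @abc.abstractmethod
    def initialize(self):
        """Prepare whatever transport the driver uses."""

    @abc.abstractmethod
    def send_event(self, topic, event):
        """Deliver one event to every subscriber of the topic."""


class SubscriberSketch(abc.ABC):
    """Hypothetical subscriber interface: one callback list per topic."""

    @abc.abstractmethod
    def register_topic(self, topic, callback):
        """Call callback(event) for each event published on topic."""


class PubSubSketch(abc.ABC):
    """Hypothetical driver entry point, mirroring the two getters."""

    @abc.abstractmethod
    def get_publisher(self):
        """Return the publisher half of the driver."""

    @abc.abstractmethod
    def get_subscriber(self):
        """Return the subscriber half of the driver."""


class InMemoryPublisher(PublisherSketch):
    def __init__(self, callbacks):
        self._callbacks = callbacks
        self._ready = False

    def initialize(self):
        # A real driver would create and bind its socket here.
        self._ready = True

    def send_event(self, topic, event):
        if not self._ready:
            raise RuntimeError("initialize() must be called first")
        for callback in self._callbacks.get(topic, ()):
            callback(event)


class InMemorySubscriber(SubscriberSketch):
    def __init__(self, callbacks):
        self._callbacks = callbacks

    def register_topic(self, topic, callback):
        self._callbacks[topic].append(callback)


class InMemoryPubSub(PubSubSketch):
    """Toy driver: publisher and subscriber share one callback table."""

    def __init__(self):
        self._callbacks = defaultdict(list)

    def get_publisher(self):
        return InMemoryPublisher(self._callbacks)

    def get_subscriber(self):
        return InMemorySubscriber(self._callbacks)


# Usage: the caller sees only the driver and the two objects it returns.
driver = InMemoryPubSub()
received = []
driver.get_subscriber().register_topic("ports", received.append)

publisher = driver.get_publisher()
publisher.initialize()
publisher.send_event("ports", {"id": "port-1", "action": "create"})
publisher.send_event("routers", {"id": "router-1"})  # no subscriber
```

Because callers depend only on the driver and the objects it hands out, a network-based and an IPC-based driver can be swapped without changing the calling code.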

Configuration

The following parameters allow configuration of the publish-subscribe mechanism. Only parameters which need to be handled by the publish-subscribe drivers are listed here. For a full list, refer to dragonflow/conf/df_common_params.py [df_common_params.py].

- pub_sub_driver - The alias to the class implementing PubSubApi for network-based pub/sub.

- publisher_port - The port to which the network publisher should bind. It is also the port to which the network subscribers connect.

- publisher_transport - The transport protocol (e.g. TCP, UDP) over which pub/sub network communication is passed.

- publisher_bind_address - The local address to which the network publisher should bind. ‘*’ means all addresses.
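
As an illustration of how these parameters could combine, the sketch below builds ZeroMQ-style endpoint strings of the form transport://address:port. The helper names, the configuration values, and the publisher IP address are assumptions chosen for the example. They are not taken from the Dragonflow code and are not necessarily the defaults.

```python
def publisher_bind_endpoint(transport, bind_address, port):
    """Compose the endpoint a network publisher would bind to."""
    if not 0 < port < 65536:
        raise ValueError("port out of range: {}".format(port))
    return "{}://{}:{}".format(transport.lower(), bind_address, port)


def subscriber_connect_endpoint(transport, publisher_ip, port):
    """Compose the endpoint a network subscriber would connect to."""
    if not 0 < port < 65536:
        raise ValueError("port out of range: {}".format(port))
    return "{}://{}:{}".format(transport.lower(), publisher_ip, port)


# Illustrative values only.
conf = {
    "publisher_transport": "TCP",
    "publisher_bind_address": "*",
    "publisher_port": 8866,
}

bind_endpoint = publisher_bind_endpoint(
    conf["publisher_transport"],
    conf["publisher_bind_address"],
    conf["publisher_port"],
)

# The subscriber uses the same port, but a concrete publisher address
# (192.0.2.10 is a documentation-only address).
connect_endpoint = subscriber_connect_endpoint(
    conf["publisher_transport"], "192.0.2.10", conf["publisher_port"]
)
```

Here the publisher binds to every local address via ‘*’, while each subscriber needs the address of the specific publisher it connects to.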

Some publish-subscribe drivers do not need to use a publisher service.

This can be the case if e.g. the publisher does not bind to the communication socket.

All publishers are created using the pub_sub_driver.

Reference Implementation

ZeroMQ is used as a base for the reference implementation. The reference implementation can be found in dragonflow/db/pubsub_drivers/zmq_pubsub_driver.py [zmq_pubsub_driver.py].

In it, there are two implementations of PubSubApi:

- ZMQPubSub - For the network implementation.

- ZMQPubSubMultiproc - For the IPC implementation.

In both cases, extensions of ZMQPublisherAgentBase and ZMQSubscriberAgentBase are returned.

In the case of the subscriber, the only difference is in the implementation of connect: the IPC implementation connects over ZMQ’s ipc protocol, whereas the network implementation connects over the transport protocol provided via publisher_transport.
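
The subscriber split described above can be sketched as two subclasses that differ only in connect. This is an illustration and not the code in zmq_pubsub_driver.py: the class names, constructor arguments, and the socket path are hypothetical, and a recording stub replaces the real ZeroMQ socket so that the example runs without ZeroMQ installed.

```python
class RecordingSocket:
    """Stand-in for a socket: records endpoints instead of connecting."""

    def __init__(self):
        self.endpoints = []

    def connect(self, endpoint):
        self.endpoints.append(endpoint)


class SubscriberSketchBase:
    """Shared subscriber logic; only connect() varies per transport."""

    def __init__(self, socket):
        self.socket = socket

    def connect(self):
        raise NotImplementedError


class NetworkSubscriberSketch(SubscriberSketchBase):
    def __init__(self, socket, transport, publisher_ip, port):
        super().__init__(socket)
        self.endpoint = "{}://{}:{}".format(transport, publisher_ip, port)

    def connect(self):
        # Uses the transport named by publisher_transport.
        self.socket.connect(self.endpoint)


class IpcSubscriberSketch(SubscriberSketchBase):
    def __init__(self, socket, socket_path):
        super().__init__(socket)
        self.endpoint = "ipc://" + socket_path

    def connect(self):
        # Uses the ipc protocol and a local socket file.
        self.socket.connect(self.endpoint)


network_socket = RecordingSocket()
NetworkSubscriberSketch(network_socket, "tcp", "192.0.2.10", 8866).connect()

ipc_socket = RecordingSocket()
# Hypothetical socket path, chosen for the example.
IpcSubscriberSketch(ipc_socket, "/tmp/example-publisher-socket").connect()
```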

In the case of the publisher, the difference is in the implementation of initialize, _connect, and send_event. The difference in connect is for the same reasons as for the subscribers. The difference in initialize is because the multi-proc subscriber uses the lazy initialization pattern. This also accounts for the difference in send_event.
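
The lazy initialization pattern mentioned above can be sketched as follows. This is a generic illustration under assumed names, not the Dragonflow implementation: initialize deliberately opens nothing, and the first call to send_event triggers _connect. The socket_factory argument is hypothetical and returns a plain list in place of a real socket.

```python
class LazyPublisherSketch:
    """Publisher that defers opening its socket until the first send."""

    def __init__(self, socket_factory):
        self._socket_factory = socket_factory
        self._socket = None

    def initialize(self):
        # Nothing is opened here; the socket is created on demand.
        self._socket = None

    def _connect(self):
        self._socket = self._socket_factory()

    def send_event(self, event):
        if self._socket is None:
            # First use: connect now, then reuse the socket afterwards.
            self._connect()
        self._socket.append(event)


created_sockets = []


def make_fake_socket():
    """Hypothetical factory; a list stands in for a real socket."""
    fake_socket = []
    created_sockets.append(fake_socket)
    return fake_socket


lazy_publisher = LazyPublisherSketch(make_fake_socket)
lazy_publisher.initialize()
sockets_after_initialize = len(created_sockets)

lazy_publisher.send_event("first")
lazy_publisher.send_event("second")
sockets_after_sends = len(created_sockets)
```

In this sketch the cost of connecting is paid by whichever call sends the first event, which is why initialize and send_event both differ from an eagerly connecting publisher.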

References

[spec] https://docs.openstack.org/dragonflow/latest/specs/publish_subscribe_abstraction.html

[pub_sub_api.py] https://github.com/openstack/dragonflow/tree/master/dragonflow/db/pub_sub_api.py

[df_common_params.py] https://github.com/openstack/dragonflow/blob/master/dragonflow/conf/df_common_params.py

[zmq_pubsub_driver.py] https://github.com/openstack/dragonflow/tree/master/dragonflow/db/pubsub_drivers/zmq_pubsub_driver.py

Except where otherwise noted, this document is licensed under Creative Commons Attribution 3.0 License.